Since Keywee was founded, we’ve prided ourselves on using cutting-edge AI to drive success for our customers. We use a combination of Machine Learning and Natural Language Processing models to inform everything from audience targeting to post text generation. We’re constantly running tests to see what works and what doesn’t.

Recently we ran an interesting experiment with post text and AI that I think anyone running ads on social will find useful and enlightening. That’s what I want to talk about today, so let’s dive in.

More Than a Buzzword

Confession time: Before I came to Keywee I was fairly dismissive of AI for a couple of reasons. First, it seemed like every Tom, Dick, and Harry in the tech world claimed to use it. Second, most of the real-world applications I saw that claimed to use it were underwhelming at best. In a previous role I’d seen several attempts at using AI to generate text, and the results were usually grammatically incorrect nonsense. Add to that the cultural references, from Skynet to The Matrix, and I basically put my fingers in my ears and walked on.

My mind has definitely been changed in the last year. The advances that Keywee has harnessed to inform our technology have made it so that we can generate text that is effective, while also being able to predict its performance relatively well. It’s made me a believer.

We consistently run sanity tests to make sure our models work, and we recently ran one that wasn’t only helpful for us; I think it’s helpful for anyone wanting to create effective post text (whether that’s using AI or just your own wits and creativity). In all earnestness, it also taught me a bit about both the potential and the limitations of AI.

Setting the Stage

Our AI has been trained on millions and millions of social media posts, so the assumption is that it should know both what to write, and how well that writing will do. For this test, we specifically wanted to see whether we were good at predicting performance. Our performance predictor basically gives post text a score between 0 and 100. The higher the score, the better it should perform.

We chose 30 articles, ranging from sports to cooking to politics, and ran A/B tests on those. The only variable we changed was the post text. The headlines, images, targeting, and bidding remained the same. In short, the test was run in a completely sterile environment.

For each article, we tested two post texts with a difference of at least 20 points between them. So a low performer with a score of, say, 40 would be compared with a text that scored 60 or higher.

For example, for an article about hiking horror stories, we used these texts:

Post text: You never know what lies beyond the woods.

Predictive Performance Score: 59

—

Post text: These stories are terrifying.

Predictive Performance Score: 95
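If you want to set up a similar test yourself, the pairing rule is easy to check in code. The snippet below is only a minimal sketch: the function name and constant are made up for illustration and are not part of Keywee’s product. It assumes scores sit on the 0 to 100 scale described above and that a pair qualifies when the gap is at least 20 points.

```python
# Minimum gap between the two predicted scores, per the test setup above.
MIN_SCORE_GAP = 20


def is_valid_pair(low_score: int, high_score: int, min_gap: int = MIN_SCORE_GAP) -> bool:
    """Return True if both scores are on the 0-100 scale and differ by at least min_gap."""
    for score in (low_score, high_score):
        if not 0 <= score <= 100:
            raise ValueError(f"score out of range: {score}")
    return abs(high_score - low_score) >= min_gap


# The hiking example: 59 vs. 95 is a 36-point gap, so it qualifies.
print(is_valid_pair(59, 95))
# A 40 vs. 55 pairing would be too close to include.
print(is_valid_pair(40, 55))
```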

We wanted to see if our grading system held up, and to hopefully learn more about what texts work and what texts don’t. We used CPC and CTR as indicators.

Low CPC = Win

High CTR = Win
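For anyone newer to these metrics, here is a rough sketch of how the two indicators are calculated and compared. It uses the standard definitions (CTR is clicks divided by impressions, CPC is spend divided by clicks). The class and function names are hypothetical, and the numbers are invented purely to show the mechanics; they are not results from our test.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    impressions: int
    clicks: int
    spend: float  # total ad spend, in a single currency

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks per impression."""
        return self.clicks / self.impressions

    @property
    def cpc(self) -> float:
        """Cost per click: spend per click."""
        return self.spend / self.clicks


def winners(a: VariantStats, b: VariantStats) -> dict:
    """Pick the winner per indicator: higher CTR wins, lower CPC wins.

    Ties go to "b" in this simple sketch.
    """
    return {
        "ctr": "a" if a.ctr > b.ctr else "b",
        "cpc": "a" if a.cpc < b.cpc else "b",
    }


# Invented numbers, for illustration only.
text_a = VariantStats(impressions=10_000, clicks=200, spend=100.0)
text_b = VariantStats(impressions=10_000, clicks=100, spend=80.0)
print(winners(text_a, text_b))
```

Here the first text takes both indicators: it earns more clicks per impression and pays less for each one.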

Key Takeaways

Let’s start with the headline from the results: Post texts with the higher performance score had an average of 18% higher CTR, and 14% lower CPC.

Looking at the example from above, the actual result was that CPC was about 12% lower on the high-scoring text, and CTR was about 11% higher.
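Percentages like “18% higher” and “12% lower” are relative changes against the low-scoring text. The sketch below shows one way to compute them for your own tests. It assumes the overall figure is a simple average of the per-test changes, which is one reasonable way to summarize and not necessarily how every team does it. The function names and sample numbers are made up for illustration.

```python
def relative_change(baseline: float, variant: float) -> float:
    """Percentage change of variant relative to baseline (positive means higher)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (variant - baseline) / baseline * 100


def average_change(pairs: list[tuple[float, float]]) -> float:
    """Mean of the per-test relative changes, given (baseline, variant) pairs."""
    changes = [relative_change(baseline, variant) for baseline, variant in pairs]
    return sum(changes) / len(changes)


# Invented CTR values (in percent) for the low- and high-scoring text in three tests.
ctr_pairs = [(2.0, 2.5), (1.0, 1.5), (4.0, 3.0)]
print(round(average_change(ctr_pairs), 1))
```

The same two functions work for CPC; there, a negative result is the good outcome, since it means the high-scoring text cost less per click.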

We also wanted to truly put our AI through its paces, so we gave the post texts to our own team – who are masters at Facebook – and asked them to predict which ones would do better. They got it right about 50% of the time – a coin toss. The AI did measurably better.

Here’s my key takeaway: AI is not perfect. It will probably never be able to write text that is as good as what a talented human writer can produce. However, when it comes to taking data and turning it into something coherent and useful – there is nothing better. The Keywee team isn’t “worse” than the AI. The difference between them is that the Keywee team doesn’t have the ability to crunch millions of numbers and build out probability scenarios. We’re human, for better or worse. I see AI as a helping hand, never a replacement. Using AI for text generation should be akin to using Photoshop to create an image. The human spark is still there.

Fun Facts From Our Findings

Other than the above, I wanted to mine the data to see if there was anything actionable that everybody can use to inform their own campaigns, AI or no AI. Here are a few things I discovered:

- Post text matters! I mean, we knew that because otherwise we wouldn’t have built a whole AI infrastructure around it, but it’s always a good reminder. 🙂 The top performing posts had a CPC that was 14% lower on average, and a CTR that was 18% higher on average. These types of margins could be the difference between a successful and an unsuccessful campaign, no matter what business goal you’re optimizing for. If you’re not testing multiple variations of post text, you really should be.

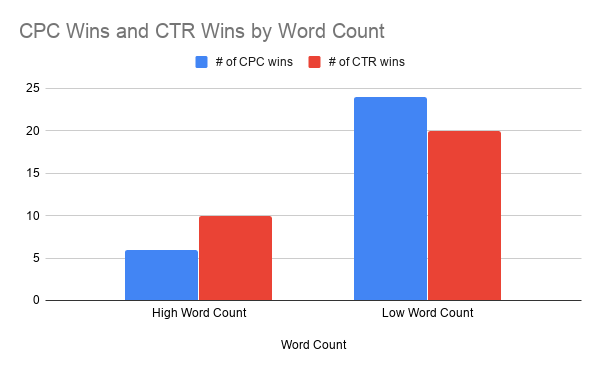

- Word count matters! There are different schools of thought when it comes to post text. In the test we ran, shorter texts clearly performed better. For our purposes, “short” was around 5 words on average. Of course, there will be situations where more is better, which is why A/B testing continuously is so important.

- When it comes to content, lose the CTAs. About ⅓ of the text variations had a call to action in them – i.e. “Read this”, “Learn how” and so on. Those lost out to more editorial texts 60% of the time.

I think it’s often easy to get complacent when it comes to post text, and even if we got some clear-cut conclusions, it doesn’t mean that they are set in stone. If nothing else, I hope sharing some of this data encourages you to test more often.

If you want to learn more about our text generation superpowers, check them out here.